Last Updated on January 28, 2026 by DarkNet

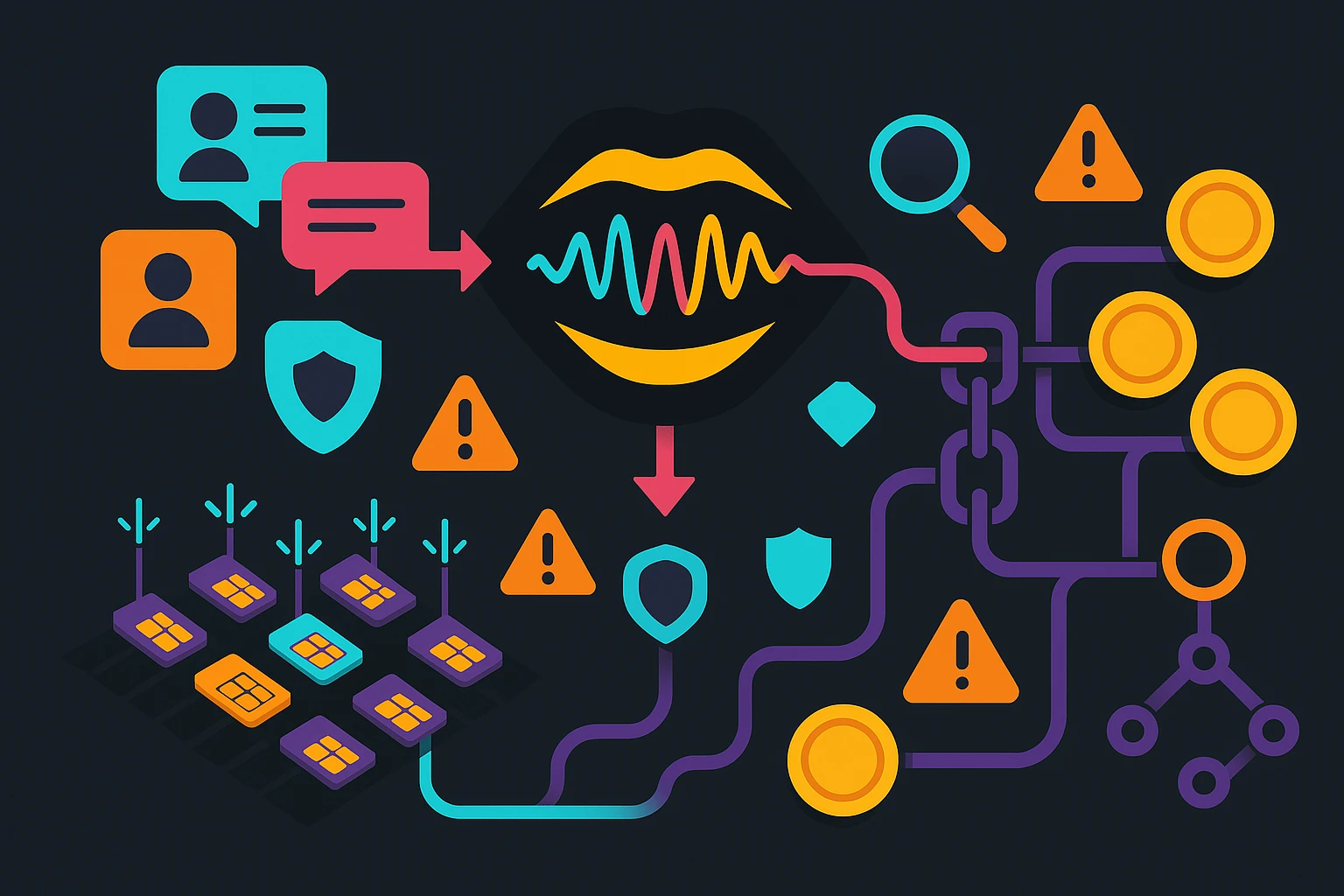

Pig-butchering scams now run like digital call centers: voice AI for pressure, SIM farms for reach, and crypto rails for fast cash-out. Here’s how they scale—and how to spot and stop them.

What “Pig Butchering” Looks Like in 2026 (and Why It Keeps Evolving)

From grooming to extraction: the 4-phase pattern

Pig-butchering scams follow a playbook that looks personal but is industrial at scale. The typical sequence in 2026 is:

- Grooming: An unsolicited approach—often a “wrong number,” dating app match, or “mentor” outreach—followed by friendly daily chat to build routine.

- Trust-building: Photos, voice notes, and low-stakes favors increase rapport. Scammers may mirror interests and time zones, or use AI to personalize tone.

- Investment lure: A “once-in-a-lifetime” opportunity, usually crypto or FX, presented as safe and exclusive. Early “proof” may be faked dashboards or staged withdrawals.

- Extraction: Escalating deposits, time pressure, then stonewalling. If victims resist, threats or impersonation may follow; if they keep paying, new “fees” appear and accounts get locked before any withdrawal arrives.

The emotional arc is deliberate: establish intimacy, project authority, then compress decision time. When one pretext fails, another appears.

Industrialized operations at scale

Behind the chat is a production line. Teams handle lead generation, grooming, escalation, and “cash-out.” AI assists with drafting, translation, and scheduling across time zones. SIM farms provide phone numbers in bulk. Crypto wallets are pre-staged to receive and move funds. The sophistication is less about genius and more about process: dynamic scripts, role-based access, and rate-limited automation to stay below platform thresholds.

Who gets targeted in 2026

Targeting has broadened. While romance angles remain common, 2026 shows more “mentor” and “business insider” pitches aimed at professionals, retirees, and crypto-curious users. Scammers often match the victim’s platform: dating apps for romance pretexts; professional networks for mentorship; messaging apps for daily rapport. Regional holidays, market volatility, and news events are exploited to time the ask.

The New Digital Call Center Stack: From Chat Scripts to Full Omnichannel Funnels

CRM-style pipelines without the morality

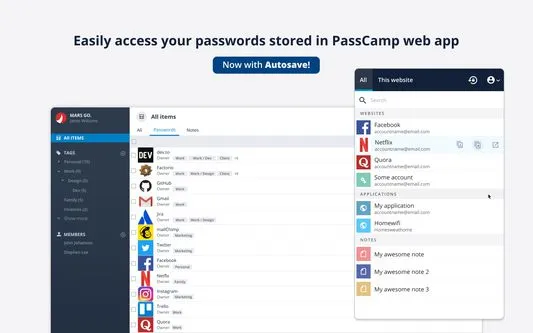

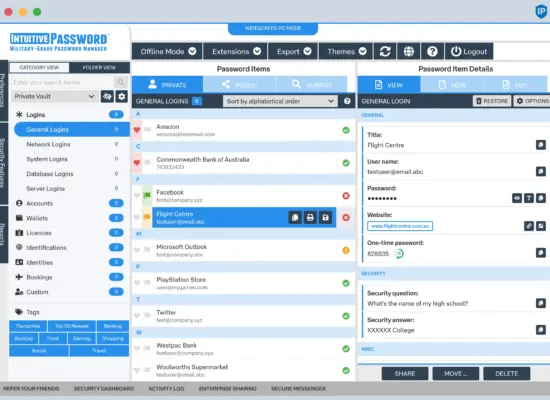

Modern operations behave like sales teams. Each target sits in a pipeline with tags (risk appetite, time since last deposit, objections), task queues (send check-in, share “profit” screenshot), and escalation paths (introduce “analyst,” switch to voice, schedule a video check-in). Managers review conversion metrics and adjust scripts—not to inform, but to maximize deposits.

Channel handoffs that build pressure

Handoffs are planned. A first contact on a dating app moves to an encrypted messenger “for privacy.” When stakes grow, voice calls or video chats add credibility. If platforms flag accounts, the persona reappears on another app or phone number. Each step increases psychological commitment while fragmenting evidence across services.

Automated personas and content engines

Personas are prebuilt with backstories, photosets, and schedules. Generative tools draft localized messages and produce “performance charts.” Translation layers make operators globally interchangeable. None of this requires cutting-edge technology—just consistency and a feedback loop that rewards tactics that drive deposits.

Voice AI in Scam Operations: Cloning, Real-Time Voice Conversion, and Deepfake Audio

Authority spoofing and emotional leverage

Voice AI now supports real-time conversion and cloned voices. Scammers commonly use it for:

- Authority spoofing: “Bank security,” “exchange compliance,” or “senior analyst” voices that sound older, confident, and local.

- Relationship reinforcement: Warm voice notes to personalize daily contact; occasional video with tight framing to hide lip-sync issues.

- Pressure moments: Live calls during “market windows” or “compliance checks” to force decisions.

Audio deepfakes remain imperfect, but the bar for “good enough” is low when emotions run high.

Detection limits and watermarking state

Most consumer-grade deepfake detection is unreliable in real time. Watermarking for synthetic speech is emerging, but adoption is patchy and cross-platform standards are limited. Call-quality artifacts (latency, noise gating, odd prosody) are signals, not proof. Treat unexpected urgency as the primary risk indicator, not any single artifact.

Verification tactics that work in practice

- Use out-of-band callbacks to a known, official number (from an official website, not a message thread).

- Take your time and ask for specific details you can independently verify. Real institutions won’t resist verification.

- Refuse any “verification” that requires installing remote tools, sharing codes, or moving funds “for safekeeping.”

- When in doubt, slow down. Scams depend on compressed timelines.

SIM Farms and Bulk Telephony: Number Rotation, OTP Interception, and Geo-Masking

Why massive number pools matter

SIM farms—device racks hosting many phone lines—let operators cycle numbers rapidly. Rotation reduces block rates, sustains outreach campaigns, and supports impersonation by presenting local area codes. Geo-masking routes traffic through regions that match the target’s location, strengthening the illusion of proximity.

Where OTP and account-takeover risk overlaps

Some operations intersect with account-abuse ecosystems that seek one-time passwords (OTPs) or verification codes. That overlap increases risk to victims asked to “verify” accounts or share screens. This article does not detail methods; the key defense is simple: never share authentication codes or install remote-control software at someone else’s request.

Signals carriers and apps can use

- Velocity anomalies: High outbound SMS/call rates per line or per device cluster.

- Identifier mismatch: Reused device fingerprints across many numbers; unusual SIM swap patterns.

- Routing irregularities: Frequent shifts in origination country/ASN; inconsistent caller ID attestation.

- Complaints correlation: Linking user reports to specific ranges; rapid reappearance of similar traffic post-block.

Standards like STIR/SHAKEN and robust analytics help, but threat actors adapt. Data-sharing and rapid takedown workflows across carriers and apps remain essential.
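As a rough illustration of the velocity signal, the sketch below counts outbound events per line in a sliding time window. The `OutboundEvent` record, the one-hour window, and the 200-event threshold are hypothetical placeholders rather than carrier standards, and the code assumes each line’s events arrive in timestamp order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class OutboundEvent:
    line_id: str      # hypothetical subscriber-line or SIM identifier
    timestamp: float  # seconds since epoch


class VelocityMonitor:
    """Flags lines whose outbound SMS/call count in a sliding window exceeds a threshold."""

    def __init__(self, window_seconds: float = 3600.0, max_events: int = 200):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self._events = defaultdict(deque)  # line_id -> timestamps inside the window

    def record(self, event: OutboundEvent) -> bool:
        """Record one outbound event; return True if the line is now over the threshold."""
        window = self._events[event.line_id]
        window.append(event.timestamp)
        # Evict timestamps older than the window (assumes per-line time ordering).
        while event.timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_events
```

For example, with `window_seconds=60` and `max_events=5`, the sixth message a single line sends within a minute returns `True`. Because rotation spreads volume across many numbers, a real deployment would also aggregate per device cluster, not only per line.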

Traffic Sources and Lead Capture: Social Engineering Funnels Across Apps and Ads

Ad buys and lead lists

Traffic often starts with paid ads, scraped contact lists, or purchased leads. Operators test creatives that promise mentorship, “exclusive” signals, or lifestyle status. When platforms remove campaigns, variants appear with new accounts and domains. The objective is not a single viral hit but a steady stream of targets.

Common pretexts in 2026

- Wrong-number icebreakers that pivot to friendly chat.

- “Analyst/mentor” offers with screenshots of “wins.”

- Romance angles that transition into joint investing.

- “Compliance officer” or “fraud department” calls after an initial deposit, pushing for more funds to “unlock” withdrawals.

Migrating to encrypted channels

Early on, scammers push victims to encrypted or ephemeral chats. While privacy apps have legitimate uses, forced migration—especially away from platforms with stronger abuse controls—is a red flag. Once isolated, evidence fragments and platform safety teams lose visibility.
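A platform could surface forced migration early with simple text heuristics. This is a minimal sketch: the regular expressions, the app names, and the ten-message “early” window are illustrative assumptions. Keyword matching misses paraphrases and produces false positives, so it suits ranking conversations for review rather than automatic blocking.

```python
import re

# Illustrative patterns only; a real system would tune these per language and platform.
MIGRATION_PATTERNS = [
    re.compile(r"\b(add|message|text|contact|find)\s+me\s+on\s+(whatsapp|telegram|signal|wechat)\b", re.I),
    re.compile(r"\b(move|switch|continue|talk)\b.{0,30}\b(whatsapp|telegram|wechat)\b", re.I),
    re.compile(r"\bfor\s+(more\s+)?privacy\b", re.I),
]


def migration_prompt_score(message: str) -> int:
    """Count how many migration patterns match a single message."""
    return sum(1 for pattern in MIGRATION_PATTERNS if pattern.search(message))


def flag_conversation(messages: list[str], early_window: int = 10, threshold: int = 1) -> bool:
    """Flag a conversation if any of its first `early_window` messages hits `threshold` patterns."""
    return any(migration_prompt_score(m) >= threshold for m in messages[:early_window])
```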

Crypto Payout Rails: Wallet Hops, Stablecoins, and Off-Ramp Abuse

The high-level flow from deposit to cash-out

Conceptually, many operations follow a predictable arc:

- Deposits: Victims are directed to send to addresses controlled by the operation.

- Consolidation: Funds move into hub wallets, sometimes via a few “peel” hops to complicate tracing.

- Swaps: Assets shift chains or convert to stablecoins to reduce volatility.

- Off-ramp: Funds exit through accounts controlled by the operation or intermediaries. Details vary; we won’t describe methods.

None of these steps are unique to crime; the pattern and context matter. High-speed movement, clustering across many victims, and synchronized timing are common.

Why stablecoins dominate

Stablecoins reduce market risk and are widely supported on exchanges and OTC desks. In a time-compressed operation, price stability and liquidity are advantages. This same liquidity is why compliance controls on stablecoin flows are a focus for regulators.

Detecting mule networks and OTC abuse

Detection is about behavior: many small deposits converging on hubs, rapid cross-asset swaps, and repeated attempts to off-ramp through newly created or lightly used accounts. Wallet clustering, shared infrastructure, and timing correlation help analysts link activity without exposing methods publicly.
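The fan-in behavior described above (many small deposits converging on a hub) can be approximated with a sliding-window count of distinct senders per receiving address. The `Transfer` record, the 1,000-unit “small” cutoff, the five-sender minimum, and the 24-hour window are assumptions for illustration; production systems would add consolidation timing and group by wallet cluster rather than by single address.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str
    receiver: str
    amount: float     # assumed to be normalized to one unit, e.g. USD equivalent
    timestamp: float  # seconds since epoch


def find_fan_in_hubs(
    transfers: list[Transfer],
    small_amount: float = 1_000.0,
    min_senders: int = 5,
    window_seconds: float = 86_400.0,
) -> set[str]:
    """Return receivers that got small deposits from at least `min_senders`
    distinct senders within some `window_seconds` span."""
    by_receiver: dict[str, list[Transfer]] = defaultdict(list)
    for t in transfers:
        if t.amount <= small_amount:
            by_receiver[t.receiver].append(t)

    hubs: set[str] = set()
    for receiver, items in by_receiver.items():
        items.sort(key=lambda t: t.timestamp)
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most window_seconds.
            while items[end].timestamp - items[start].timestamp > window_seconds:
                start += 1
            senders = {t.sender for t in items[start:end + 1]}
            if len(senders) >= min_senders:
                hubs.add(receiver)
                break
    return hubs
```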

Operational Security and Workforce Models: Playbooks, KPIs, and Compartmentalization

Assembly-line roles

Teams are compartmentalized. “Openers” handle first contact; “warmers” nurture daily chat; “closers” push large deposits; separate units manage wallets and payouts. Compartmentalization shields the operation—no single worker sees end-to-end details.

Metrics that drive manipulation

KPI dashboards track response latency, chat length, time-to-first-deposit, average deposit size, churn after withdrawal refusal, and block rates by platform. These metrics drive experiments: which pretexts convert, which times of day land, and which “proof” artifacts reduce skepticism.

OpSec mistakes investigators watch for

- Reused assets: profile photos, bios, landing pages, and crypto addresses.

- Timing tells: synchronized outreach windows tied to shift schedules.

- Infrastructure overlap: shared hosting, payment trails, or device fingerprints.

- Inconsistent localization: mismatched holidays, time zones, and idioms.

No operation is perfect. Small mistakes accumulate into investigative leads.
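Reused assets can be turned into links between personas. The sketch below keys each asset (image bytes via SHA-256, bios with normalized whitespace and case, addresses as-is), then uses a union-find structure to group accounts that share any key. Exact hashing is a simplifying assumption: a recropped or recompressed photo gets a new hash, which is why real systems typically use perceptual hashing instead. All names here are hypothetical.

```python
from __future__ import annotations

import hashlib


def asset_key(kind: str, value: bytes | str) -> str:
    """Normalize an asset to a stable key; raw bytes (e.g. image files) are SHA-256 hashed."""
    if isinstance(value, bytes):
        return f"{kind}:{hashlib.sha256(value).hexdigest()}"
    text = " ".join(value.split())
    # Case-fold free text only; some address formats (e.g. Base58) are case-sensitive.
    if kind == "bio":
        text = text.casefold()
    return f"{kind}:{text}"


class UnionFind:
    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def cluster_accounts(accounts: dict[str, list[str]]) -> list[set[str]]:
    """Group accounts (account_id -> asset keys) that share any asset; return groups of 2+."""
    uf = UnionFind()
    first_owner: dict[str, str] = {}
    for acct, keys in accounts.items():
        uf.find(acct)  # register every account, even those with no shared assets
        for key in keys:
            if key in first_owner:
                uf.union(first_owner[key], acct)
            else:
                first_owner[key] = acct
    groups: dict[str, set[str]] = {}
    for acct in accounts:
        groups.setdefault(uf.find(acct), set()).add(acct)
    return [g for g in groups.values() if len(g) > 1]
```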

Red Flags and Detection: Signals for Victims, Telecoms, Exchanges, and Platforms

Individual red flags checklist

- Unsolicited “wrong-number” or cold outreach that quickly becomes friendly and persistent.

- Early push to move off the original app to an encrypted messenger “for privacy.”

- Promises of guaranteed returns, insider tips, or “risk-free” trading.

- Pressure to act within hours, especially outside normal business hours.

- Requests to keep the opportunity secret from friends, family, or your bank.

- Voice notes/calls that dodge independent verification or resist callbacks to official numbers.

- Withdrawal “fees,” “taxes,” or “unlock payments” required before funds supposedly release.

- Instructions to install remote software, share codes, or move funds “for safekeeping.”

Telecom and messaging indicators

- Telecom signals:

  - High outbound SMS/call velocity with rotating numbers and similar content.

  - Frequent number churn tied to the same device fingerprints or IP ranges.

  - Low or inconsistent caller ID attestation; irregular call origin paths.

  - Complaint spikes on specific ranges shortly after activation.

- Social/messaging platform signals:

  - New accounts initiating many cross-border chats with similar openers.

  - Rapid migration prompts to external encrypted apps.

  - Reused images, bios, or link patterns across many accounts.

  - Coordinated activity windows consistent with shift work.
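For “similar openers,” one common approach is near-duplicate text detection. This sketch compares word trigrams with Jaccard similarity; the trigram size and the 0.5 threshold are illustrative assumptions. Pairwise comparison is quadratic, so at platform scale a technique such as MinHash with locality-sensitive hashing would replace the inner loop.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Lowercased word k-grams; short messages fall back to a single tuple of all words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_opener_pairs(openers: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str]]:
    """Return account pairs whose first messages have shingle Jaccard >= threshold."""
    sh = {acct: shingles(msg) for acct, msg in openers.items()}
    return [(a, b) for a, b in combinations(sorted(sh), 2) if jaccard(sh[a], sh[b]) >= threshold]
```

Here the template stays the same while the name changes, which is typical of wrong-number icebreakers: “Hi is this David? I think I have the wrong number, sorry!” and the same line addressed to “Michael” share 7 of 13 distinct trigrams, so they pair up at the default threshold.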

Exchange and wallet anomaly signals

- Inbound patterns: multiple small deposits from unrelated wallets to common hubs, followed by quick consolidation.

- Movement behaviors: peel chains, rapid swaps to stablecoins, and synchronized off-ramp attempts.

- Account traits: newly onboarded users suddenly transacting at high value after interacting with known scam clusters.

- KYC/AML cues: mismatched geolocation, device, or IP histories; shared payout endpoints across “unrelated” accounts.
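Peel chains can be screened with a simple shape test once a candidate chain has been extracted; following each hop’s large remainder output into the next transaction is assumed to have happened already. In this sketch a hop counts as a peel when it has exactly two outputs, one at most 10% of the input and the other at least 85%. Those fractions and the `Hop` type are hypothetical. Legitimate wallets and exchange payouts can produce the same shape, so a long run is a reason to look closer, not a verdict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """Simplified view of one transaction in an already-followed candidate chain."""
    input_amount: float
    outputs: tuple[float, ...]


def is_peel_hop(hop: Hop, max_peel_fraction: float = 0.10, min_forward_fraction: float = 0.85) -> bool:
    """Two outputs: a small 'peel' and a large remainder carried forward (fees allowed for)."""
    if len(hop.outputs) != 2 or hop.input_amount <= 0:
        return False
    small, large = sorted(hop.outputs)
    return (small <= max_peel_fraction * hop.input_amount
            and large >= min_forward_fraction * hop.input_amount)


def longest_peel_run(chain: list[Hop], **kwargs: float) -> int:
    """Length of the longest run of consecutive peel-shaped hops in the chain."""
    best = current = 0
    for hop in chain:
        if is_peel_hop(hop, **kwargs):
            current += 1
            best = max(best, current)
        else:
            current = 0
    return best
```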

Countermeasures in 2026: Verification, Monitoring, and Incident Response Playbooks

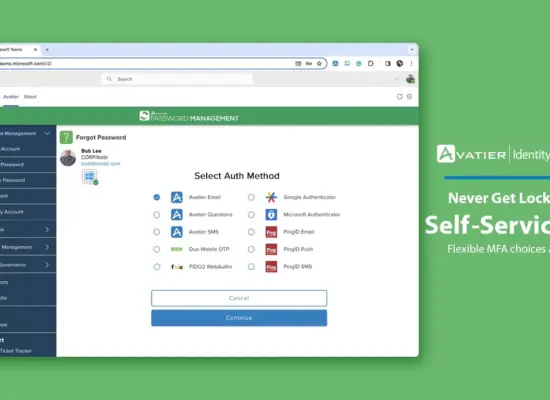

Verify identity and context, not just caller ID

- Use official channels: call back using numbers from an institution’s official website, never from a text or link.

- Check liveness and context: ask for details only a legitimate contact would know, and confirm via a second channel you control.

- Slow the timeline: require written info, cooling-off periods, and third-party review for significant transfers.

Platform defense patterns

- Friction by risk: add verification for high-velocity outreach, cross-app migration prompts, and first-time high-value transactions.

- Behavioral models: detect persona reuse, conversation trees, and synchronized campaign timing.

- Shared intelligence: collaborate across carriers, platforms, and exchanges to correlate signals and accelerate takedowns.

- Identity and payments controls: strengthen KYC, monitor for mule patterns, and apply graduated limits pending review.
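Graduated friction can be expressed as a score mapped to tiers. The signal names, weights, 30-day “new account” cutoff, and tier thresholds below are hypothetical and would need calibration against labeled cases. The point is the structure: low scores pass, mid scores trigger step-up verification, and high scores hold the action pending human review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskSignals:
    high_velocity_outreach: bool = False
    migration_prompt_seen: bool = False
    first_time_high_value: bool = False
    linked_to_flagged_cluster: bool = False
    account_age_days: int = 365


# Hypothetical weights and cutoffs, for illustration only.
WEIGHTS = {
    "high_velocity_outreach": 2,
    "migration_prompt_seen": 2,
    "first_time_high_value": 3,
    "linked_to_flagged_cluster": 4,
}
NEW_ACCOUNT_DAYS = 30
NEW_ACCOUNT_WEIGHT = 1


def risk_score(s: RiskSignals) -> int:
    """Sum the weights of the boolean signals that are set, plus a bump for new accounts."""
    score = sum(weight for name, weight in WEIGHTS.items() if getattr(s, name))
    if s.account_age_days < NEW_ACCOUNT_DAYS:
        score += NEW_ACCOUNT_WEIGHT
    return score


def friction_tier(s: RiskSignals) -> str:
    """Map a score to graduated friction: allow, step-up verification, or hold for review."""
    score = risk_score(s)
    if score >= 6:
        return "hold_for_review"
    if score >= 3:
        return "step_up_verification"
    return "allow"
```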

What to do if you’re targeted: an action plan

- Stop sending funds. Do not pay “unlock fees,” “taxes,” or “verification deposits.”

- Preserve evidence: export chats, save call logs/voicemails, capture wallet addresses and transaction hashes, and take screenshots of profiles and websites.

- Report quickly:

  - United States: file with the FTC at reportfraud.ftc.gov and the FBI’s IC3 at ic3.gov.

  - European Union: report to your national cybercrime unit; Europol also publishes public awareness guidance.

  - Elsewhere: contact your national police/cybercrime portal and consumer protection agency.

- Notify platforms involved: dating/messaging apps, telecom carriers, and any exchange or wallet provider you used. Provide evidence and request account flags.

- If crypto was sent, share transaction hashes with the receiving exchange if identifiable, and ask for an internal freeze review. Freezes are not guaranteed and depend on policy and timing.

- Consider fraud counseling and legal advice. Do not engage with “recovery” services that promise guaranteed recovery of lost funds.

Recovery is uncertain. Fast, well-documented reporting improves the odds of disruption, even if funds cannot be retrieved.

Legal, Regulatory, and Reporting: Where Enforcement Is Focusing and How to File Reports

Who to contact and how to report

- US federal: FBI IC3, FTC.

- Financial intelligence: Suspicious Activity Reports via your institution; US guidance at FinCEN.

- Telecom abuse: report robocall/spoofing to your carrier; US guidance at the FCC on STIR/SHAKEN: fcc.gov/call-authentication.

- International: national cybercrime portals and Europol’s public awareness resources.

What evidence helps investigators

- Full chat exports with timestamps and account handles.

- Call logs, voicemails, and any recorded voice notes.

- Wallet addresses and transaction hashes, including links to public block explorers.

- Receipts, bank statements showing transfers, and exchange account IDs (if applicable).

- Screenshots of websites, dashboards, and domain URLs.
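Victims or support staff assembling this evidence may find a simple manifest useful. The sketch records each saved file’s size and SHA-256 digest so later copies can be checked for changes, and it separates entries that look like transaction hashes (64 hex characters, optionally prefixed with `0x` as on Ethereum) from entries that need a second look. The folder layout and field names are assumptions, not a format any agency requires.

```python
import hashlib
import re
from datetime import datetime, timezone
from pathlib import Path

# 64 hex characters (e.g. a Bitcoin txid); Ethereum-style hashes add a "0x" prefix.
TX_HASH_RE = re.compile(r"^(0x)?[0-9a-fA-F]{64}$")


def looks_like_tx_hash(value: str) -> bool:
    return bool(TX_HASH_RE.match(value.strip()))


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large recordings or exports don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path, tx_hashes: list[str]) -> dict:
    """Describe every file under evidence_dir and sort user-supplied transaction hashes."""
    files = [
        {"file": str(p.relative_to(evidence_dir)), "bytes": p.stat().st_size, "sha256": sha256_file(p)}
        for p in sorted(evidence_dir.rglob("*"))
        if p.is_file()
    ]
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "files": files,
        "tx_hashes": [t.strip() for t in tx_hashes if looks_like_tx_hash(t)],
        "unrecognized_tx_entries": [t for t in tx_hashes if not looks_like_tx_hash(t)],
    }
```

Save the result (for example with `json.dumps(manifest, indent=2)`) outside the evidence folder, so a later rerun does not hash the manifest itself.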

Where regulation is moving in 2026

- Telecom: stronger call authentication and cross-border enforcement cooperation.

- Crypto: tighter KYC on off-ramps, beneficial ownership transparency, and sanctions screening. See advisories from OFAC and FinCEN.

- AI media: early moves toward provenance and watermarking standards; verification-first user education from agencies like CISA and identity guidance from NIST SP 800-63.

FAQ

What is a pig butchering scam and how is it different in 2026?

It’s a social-engineering scheme that grooms victims over weeks or months before pushing high-pressure “investments.” In 2026 it’s more industrial: AI-assisted personas, SIM-based telephony at scale, and crypto payout pipelines make operations faster and more resilient.

How can I tell if a voice note or phone call is AI-generated?

No single tell is reliable. Listen for odd pauses, flat intonation, or latency—but treat urgency as the real red flag. Always verify via official numbers or a known-contact callback, not via the channel that contacted you.

Why do scammers use SIM farms and rotating numbers?

Rotation helps avoid blocks, impersonate local presence, and maintain outreach volume. It also lets operations switch identities quickly when accounts get flagged.

What are the most common crypto payout patterns used in these scams?

Victim deposits converge on hubs, flow through a few hops (“peel chains”), get swapped—often into stablecoins—and attempt to exit through controlled accounts. The specifics vary; behavior and clustering are the detection angles.

What should I do immediately if I sent crypto to a suspected scammer?

Stop sending more. Preserve evidence (addresses, tx hashes, chats). Notify your exchange or wallet support with details; ask for an internal review. File reports with the FTC and FBI IC3 (US) or your national cybercrime unit. Freezes and recovery aren’t guaranteed—speed matters.

How can exchanges and platforms detect and disrupt pig-butchering operations?

Behavioral analytics, velocity-based friction, clustering of wallets and personas, and cross-industry intelligence sharing. Flag patterns like synchronized outreach, off-platform migration prompts, peel chains, and rapid stablecoin swaps.

Can banks or crypto exchanges reverse transactions or freeze funds in time?

Sometimes, if funds hit a custodial service that cooperates and you report quickly. On-chain transfers are final; institution-led freezes are policy- and timing-dependent. Treat reversals as possible but not expected.

Key takeaways

- Pig-butchering has matured into a digital call-center model leveraging voice AI, SIM rotation, and crypto rails.

- Voice deepfakes raise pressure and plausibility; verification via official channels beats artifact-spotting.

- SIM farms enable scale and local presence; telecom and platform collaboration is critical for disruption.

- Crypto flows often show hub consolidation, peel chains, stablecoin swaps, and synchronized off-ramp attempts.

- Individuals should watch for urgency, secrecy, and channel migration; never share codes or install remote tools on request.

- Platforms should deploy risk-based friction, behavioral models, and shared intelligence to cut campaigns off mid-funnel.

- If targeted, stop, preserve evidence, and report quickly to platforms and authorities; recovery is uncertain, speed helps.