Last Updated on January 28, 2026 by DarkNet

By 2026, data brokers don’t need third‑party cookies to know a lot about you. This guide explains how dossiers are built—and how to push back using legal, defensive tools.

What Changed by 2026: The Data Broker Economy After Cookies, ATT, and Privacy Laws

Third‑party cookies faded, mobile tracking tightened, and privacy laws grew. Yet the data-broker economy adapted. Today, identity is built from device IDs, logins, and purchase/location trails—often labeled “pseudonymous,” but frequently linkable to you.

Cookie decline vs. ID replacement: MAIDs, hashed emails, and clean rooms

- MAIDs (Mobile Advertising IDs): Device-specific ad IDs (e.g., AAID on Android, IDFA on iOS, which apps can read only after you grant tracking permission). They can be reset, but many apps still collect them unless you limit tracking.

- Hashed emails/phone numbers: A cryptographic hash of your email or phone looks “anonymous,” yet brokers match it back to you because they also hash the same data from other sources. The hash is deterministic: every vendor that normalizes yourname@email.com the same way and uses the same algorithm gets the identical output.

- Clean rooms: Environments where parties compare overlapping audiences without sharing raw data. Useful for measurement but can strengthen cross‑platform linkage.
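To see why a hash is not anonymity, here is a minimal sketch using SHA-256 from Python's standard library. The normalization rule (trim and lowercase) and both customer lists are assumptions for illustration; real vendors may normalize differently. Because the hash is deterministic, two companies that never exchange raw emails can still count who they have in common, a simplified version of the overlap computation a clean room performs, minus the access controls real clean rooms typically add.

```python
import hashlib

def hash_email(email: str) -> str:
    """Normalize (trim + lowercase, an assumed convention), then SHA-256 hash."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

# Hypothetical customer lists held by two unrelated companies.
retailer = ["YourName@email.com", "alex@example.org", "sam@example.net"]
app_vendor = ["yourname@email.com ", "jordan@example.com"]

retailer_hashes = {hash_email(e) for e in retailer}
vendor_hashes = {hash_email(e) for e in app_vendor}

# Neither side shares plaintext, yet the intersection reveals a shared person.
overlap = retailer_hashes & vendor_hashes
print(len(overlap))  # 1: same person despite different casing and a trailing space
```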

Regulatory patchwork: what laws usually cover (and what they don’t)

- Many jurisdictions provide rights to access, delete, or opt out of sales/targeting—but scope varies.

- Public‑record data, fraud-prevention uses, or aggregated data may be carved out.

- Enforcement differs; timelines range from weeks to months. Expect verification steps.

Why brokers still thrive: consent banners, SDK defaults, and “legitimate interest”

- Consent banners and dark patterns can push “Accept All.”

- SDKs bundle data collection by default; app developers may not fully audit them.

- Some actors lean on broad interpretations of “service provider,” “processing,” or “legitimate interest.”

From “Anonymous” to Identified: How Identity Graphs Stitch Your Devices and Accounts Together

An identity graph maps identifiers—emails, MAIDs, device fingerprints, IPs, purchases—into a profile. Even without your name, patterns link back to you or your household.

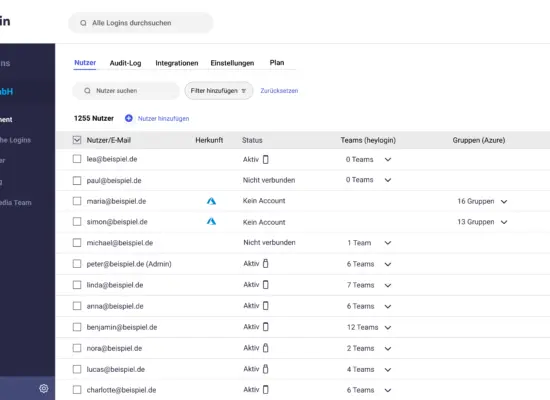

Deterministic linking: logins, emails, phone numbers, and payment tokens

- Logins: Using the same email across apps connects activity instantly.

- Phone numbers: Common as account recovery and loyalty IDs; strong match signal.

- Payment tokens: Card‑network tokens and merchant accounts can anchor recurring purchases to a profile.
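Deterministic linking is essentially a connected-components problem: any two records that share an exact identifier get merged, and merges chain. Below is a minimal sketch using a union-find (disjoint-set) structure. The record names and identifiers are hypothetical, and real identity graphs layer confidence scores and conflict rules on top of this.

```python
from collections import defaultdict

class UnionFind:
    """Disjoint-set structure used to merge records that share an identifier."""
    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        self.parent[self.find(a)] = self.find(b)

# Hypothetical records from different sources, with the identifiers seen in each.
records = {
    "news_app_login": ["email:pat@example.com", "maid:1111"],
    "store_loyalty":  ["phone:+15550100", "email:pat@example.com"],
    "food_delivery":  ["phone:+15550100", "card_token:tok_9"],
    "unrelated_app":  ["maid:2222"],
}

uf = UnionFind()
for record_id, identifiers in records.items():
    for ident in identifiers:
        uf.union(record_id, ident)  # a record joins the group of each identifier it contains

profiles = defaultdict(set)
for record_id in records:
    profiles[uf.find(record_id)].add(record_id)

for members in profiles.values():
    print(sorted(members))
```

Note that the news app and the food-delivery service never share an identifier directly; the loyalty record bridges them (email on one side, phone on the other).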

Probabilistic linking: co-location, behavior patterns, and device fingerprints

- Devices seen on the same networks at the same times get grouped into households.

- Time‑of‑day usage and travel routes help infer who’s who.

- Device fingerprints combine settings, fonts, hardware hints; anti‑fingerprinting helps, but it’s an arms race.
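A simplified sketch of one probabilistic signal: devices that repeatedly share a network overnight get grouped into a household. The observations, the night window, and the two-night threshold are all assumptions; real systems blend many weaker signals and output a probability rather than a yes/no.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical observations: (device_id, ip_address, hour_of_day, day)
observations = [
    ("phone_A", "203.0.113.7", 23, 1), ("tablet_B", "203.0.113.7", 22, 1),
    ("phone_A", "203.0.113.7", 1, 2),  ("tablet_B", "203.0.113.7", 2, 2),
    ("laptop_C", "203.0.113.7", 14, 2),  # daytime visitor, e.g. a guest
    ("phone_A", "198.51.100.4", 9, 3), ("laptop_C", "198.51.100.4", 10, 3),  # office network
]

NIGHT_HOURS = set(range(22, 24)) | set(range(0, 6))  # assumed "at home" window
MIN_SHARED_NIGHTS = 2  # assumed threshold before calling it a household

# For each (ip, day), collect the devices present at night.
night_presence = defaultdict(set)
for device, ip, hour, day in observations:
    if hour in NIGHT_HOURS:
        night_presence[(ip, day)].add(device)

# Count how many nights each device pair shared a network.
pair_nights = defaultdict(int)
for devices in night_presence.values():
    for pair in combinations(sorted(devices), 2):
        pair_nights[pair] += 1

households = [pair for pair, n in pair_nights.items() if n >= MIN_SHARED_NIGHTS]
print(households)  # [('phone_A', 'tablet_B')]
```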

Common ID artifacts: MAID/AAID, IDFV, hashed PII, household IDs

- MAID/AAID: MAID is the generic term for a mobile advertising ID; AAID is Android's version. Used for attribution and targeting unless restricted.

- IDFV: Identifier for Vendor (iOS) ties your apps from the same developer together.

- Hashed PII: Hashed emails/phones are still linkable across partners that hash the same inputs.

- Household IDs: Co‑travel, IP address patterns, and purchases from the same home create household‑level profiles.

Location Data in 2026: How Pings, Wi‑Fi, and Geofences Become a Movement Profile

Location isn’t only GPS. It’s Wi‑Fi SSIDs, Bluetooth beacons, and cell towers—plus check‑ins and photos. Combined, they reveal routines and sensitive places.

Sources of location: GPS, Wi‑Fi SSIDs, Bluetooth beacons, cell signals

- Apps can sample GPS, query nearby Wi‑Fi names, and scan Bluetooth beacons for proximity.

- Even when “precise” is off, coarse location and network metadata can still place you in neighborhoods or venues.

Geofencing and POI inference: visits to clinics, workplaces, and religious sites

- “Points of Interest” (POIs) map lat/long areas to real places; repeated pings within a polygon are treated as a visit.

- Some jurisdictions restrict sensitive-location use; practices still vary.
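A minimal sketch of geofence visit inference: a standard ray-casting point-in-polygon test plus a ping-count threshold. The coordinates, the rectangular "clinic" polygon, and the three-ping rule are invented for illustration; commercial systems may also weigh dwell time and location accuracy.

```python
def point_in_polygon(lat, lon, polygon):
    """Ray casting: toggle 'inside' each time a ray from the point crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]
        if (lon1 > lon) != (lon2 > lon):  # edge straddles the point's longitude
            # Latitude at which this edge crosses that longitude.
            cross_lat = lat1 + (lon - lon1) * (lat2 - lat1) / (lon2 - lon1)
            if lat < cross_lat:
                inside = not inside
    return inside

# Hypothetical geofence around a clinic, as (lat, lon) corners.
CLINIC = [(40.7000, -74.0100), (40.7000, -74.0080), (40.7015, -74.0080), (40.7015, -74.0100)]
MIN_PINGS = 3  # assumed rule: this many pings inside the fence counts as a "visit"

pings = [
    (40.7005, -74.0090), (40.7007, -74.0091), (40.7010, -74.0088),  # inside
    (40.7100, -74.0200),                                             # elsewhere
]

pings_inside = sum(point_in_polygon(lat, lon, CLINIC) for lat, lon in pings)
print("visit" if pings_inside >= MIN_PINGS else "no visit")  # visit
```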

Re-identification risks: uniqueness of routes, night-time home inference

- Most people have unique commute patterns; nighttime location often reveals home.

- Combining even coarse trails with other identifiers can re‑identify “anonymous” location data.
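Night-time home inference needs surprisingly little. This sketch snaps an "anonymous" device's pings to a coarse grid and picks the most common cell between 22:00 and 06:00. The pings, the night window, and the grid size are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime

# Hypothetical pings from one device: (ISO timestamp, lat, lon)
pings = [
    ("2026-01-05T23:40", 40.71234, -74.00567),
    ("2026-01-06T02:15", 40.71218, -74.00588),
    ("2026-01-06T09:30", 40.75801, -73.98551),  # daytime: likely work
    ("2026-01-06T23:05", 40.71240, -74.00571),
    ("2026-01-07T13:00", 40.75810, -73.98540),
]

def is_night(ts: str) -> bool:
    hour = datetime.fromisoformat(ts).hour
    return hour >= 22 or hour < 6  # assumed night window

# Rounding to 3 decimal places gives cells roughly 100 m across: coarse, yet enough.
night_cells = Counter(
    (round(lat, 3), round(lon, 3)) for ts, lat, lon in pings if is_night(ts)
)
likely_home, count = night_cells.most_common(1)[0]
print(likely_home, count)  # (40.712, -74.006) 3
```

Repeating the same trick on weekday daytime pings points to a workplace, and a home/work pair is often enough to single out one person.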

Purchase Trails: Card Transactions, Loyalty Programs, Email Receipts, and Data Co-ops

Transactions talk. Brokers infer demographics, interests, and life events from when, where, and what you buy—even without exact dollar amounts.

Card and bank-derived insights: merchant category, frequency, and spend bands

- Merchant Category Codes (MCCs) plus visit frequency reveal hobbies and routines.

- Spend ranges (e.g., “$50–$100 weekly”) fuel propensity scores without exposing full statements.
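Here is how little a broker needs from card data. The sketch below turns a few transactions into visit frequency per MCC and a weekly spend band. MCC 5411 (grocery stores), 5941 (sporting goods stores), and 5812 (restaurants) are standard codes; the transactions and the band cutoffs are assumptions for illustration.

```python
from collections import Counter

# Hypothetical card transactions: (week number, MCC, amount in USD)
transactions = [
    (1, "5411", 62.10), (1, "5941", 45.00), (2, "5411", 71.35),
    (2, "5941", 38.50), (3, "5411", 58.90), (3, "5812", 24.00),
    (4, "5411", 66.20), (4, "5941", 52.75),
]

# Assumed band cutoffs; real brokers use their own.
BANDS = [(0, 50, "$0–$50"), (50, 100, "$50–$100"), (100, float("inf"), "$100+")]

def spend_band(amount: float) -> str:
    for low, high, label in BANDS:
        if low <= amount < high:
            return label
    raise ValueError("negative amount")

weeks = len({week for week, _, _ in transactions})
mcc_visits = Counter(mcc for _, mcc, _ in transactions)

for mcc, visits in mcc_visits.most_common():
    weekly = sum(amt for _, m, amt in transactions if m == mcc) / weeks
    print(mcc, f"{visits / weeks:.2f} visits/week", spend_band(weekly))
```

No statement is exposed, yet "weekly grocery shopper, $50–$100" and "regular sporting-goods buyer" fall straight out.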

Loyalty IDs and “discount for data” dynamics

- Phone numbers, emails, or QR codes at checkout tie purchases to a profile in exchange for a discount.

- Some programs share de‑identified data with “partners” that can later be re‑linked.

Email receipt parsing and shopping apps: how SKU-level data leaks

- Inbox‑scanning features can extract SKU‑level purchase details; disable if not needed.

- Shopping and coupon apps may join data co‑ops—read their privacy controls before connecting accounts.

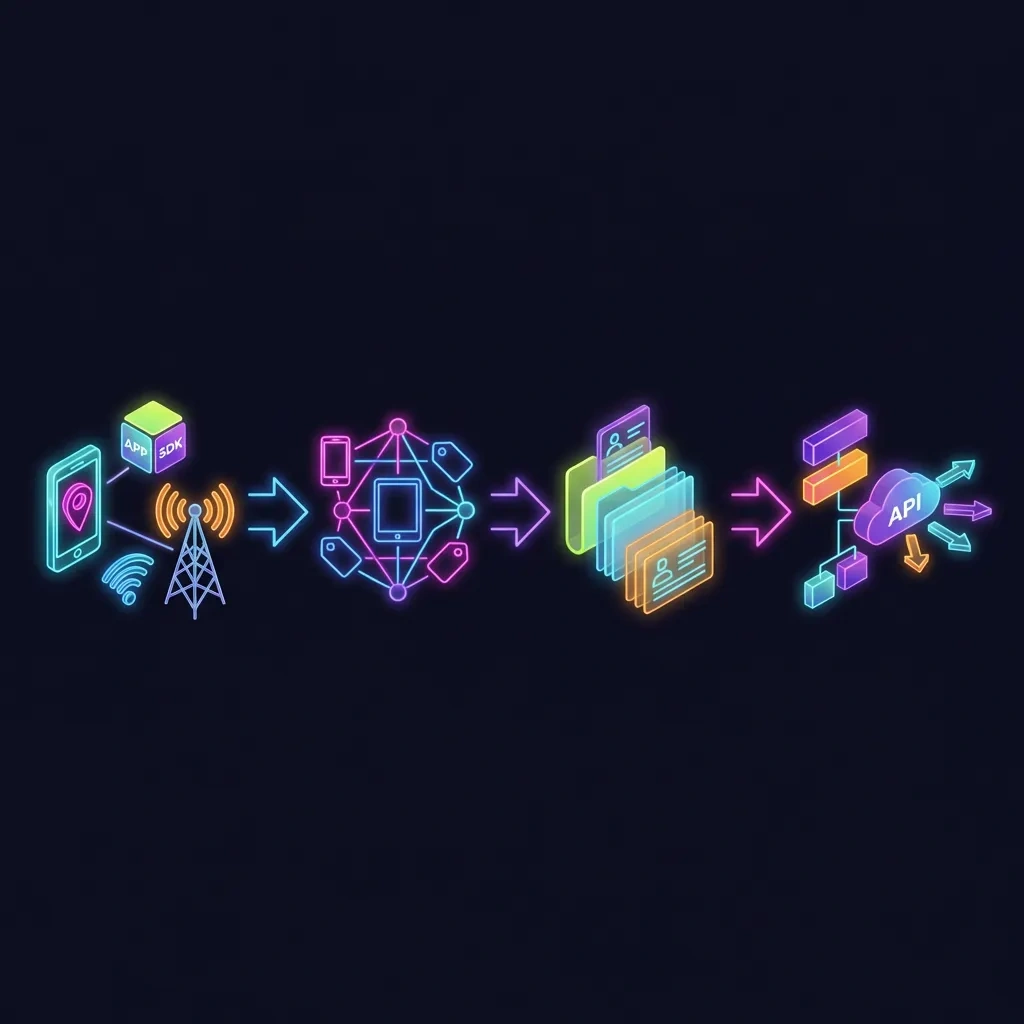

The Dossier Pipeline: Collection, Enrichment, Scoring, Packaging, and Resale

Data moves through a repeatable pipeline. Each step increases the chance it links back to you, even if labels say “pseudonymous.”

Data normalization: dedupe, canonicalization, and confidence scores

- Records from apps, ad auctions, and partners are cleaned and deduplicated.

- Confidence scores decide whether two identifiers represent the same person or household.
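A minimal sketch of normalization followed by a match-confidence score. The records, the canonicalization rules, and the field weights are hypothetical; the point is that trivially different spellings collapse to the same value once canonicalized, and a weighted sum then decides whether two records are "the same person."

```python
# Hypothetical raw records from three sources, possibly describing one person.
raw = [
    {"src": "app",     "email": " Pat.Lee@Example.com ", "phone": "(555) 010-0100", "zip": "10001"},
    {"src": "auction", "email": "pat.lee@example.com",   "phone": None,             "zip": "10001"},
    {"src": "partner", "email": None,                    "phone": "555-010-0100",   "zip": "10002"},
]

def canonical(record):
    """Canonicalize fields so trivially different spellings compare equal."""
    email = record["email"].strip().lower() if record["email"] else None
    phone = "".join(ch for ch in record["phone"] if ch.isdigit()) if record["phone"] else None
    return {"email": email, "phone": phone, "zip": record["zip"]}

# Assumed weights: exact email/phone matches are strong, a shared ZIP is weak.
WEIGHTS = {"email": 0.6, "phone": 0.5, "zip": 0.1}

def match_confidence(a, b):
    """Sum the weights of fields present in both records with equal values (capped at 1)."""
    score = sum(w for field, w in WEIGHTS.items() if a[field] and a[field] == b[field])
    return min(round(score, 2), 1.0)

recs = [canonical(r) for r in raw]
print(match_confidence(recs[0], recs[1]))  # email + ZIP
print(match_confidence(recs[0], recs[2]))  # phone only
```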

Enrichment: demographics, interests, propensity, and lookalike audiences

- Additional attributes get inferred or appended: age bands, home type, interests, “likely to move,” etc.

- Lookalikes expand reach by finding similar profiles to a seed group.
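One common way to build a lookalike audience, sketched here as an assumption rather than any vendor's actual method: average the seed profiles into a centroid and keep candidates whose cosine similarity to it clears a threshold. The interest vectors and the 0.9 cutoff are invented.

```python
import math

# Hypothetical interest vectors: [outdoors, finance, parenting, gaming]
seed = {"u1": [0.9, 0.1, 0.0, 0.2], "u2": [0.8, 0.2, 0.1, 0.1]}
candidates = {
    "u3": [0.85, 0.15, 0.05, 0.1],
    "u4": [0.0, 0.9, 0.1, 0.0],
    "u5": [0.1, 0.0, 0.2, 0.95],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

# Average the seed profiles into one centroid, then compare everyone else to it.
dims = len(next(iter(seed.values())))
centroid = [sum(v[i] for v in seed.values()) / len(seed) for i in range(dims)]

THRESHOLD = 0.9  # assumed cutoff for inclusion in the lookalike audience
lookalikes = [u for u, v in candidates.items() if cosine(v, centroid) >= THRESHOLD]
print(lookalikes)  # ['u3']
```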

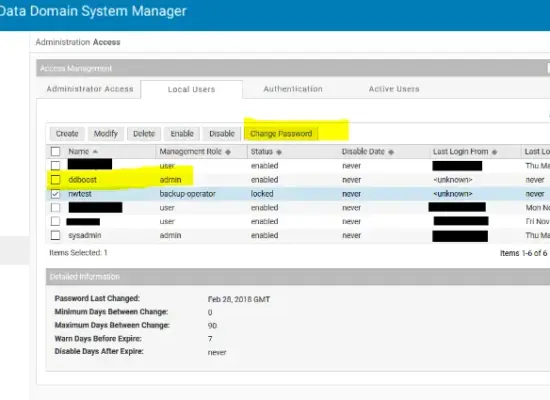

Packaging: segments, leads, background-style reports, and API access

- Outputs range from ad-targeting segments to lead lists, “background-style” compilations, and API endpoints.

- Access controls vary; misuse risk rises when APIs expose rich attributes at scale.

Simple diagram: data-broker pipeline

[Collection] (App telemetry, MAIDs, hashed emails/phones, location pings, purchases)
↓
[Identity graph] (Linkage: deterministic + probabilistic; householding; confidence scores)
↓
[Dossier] (Enrichment: demographics, interests, risk/propensity, segments)
↓
[Resale & Activation] (Ad platforms, partners, APIs, reports, clean rooms)

Where the Data Comes From: Apps, SDKs, Ad Tech, ISPs, and “Partner” Sharing

Follow the supply chain and you’ll see why reductions—not miracles—deliver the best privacy gains.

SDK supply chains and why “free” apps monetize via data resale

- Analytics, ads, and crash-reporting SDKs can collect identifiers beyond what the app itself needs.

- Developers may inherit sharing through SDK terms they didn’t negotiate.

Ad exchanges and real-time bidding side effects

- Real‑time bidding can broadcast device IDs, coarse location, segments, and page context to multiple parties—even if you don’t click an ad.

- Some browsers now partition or restrict third‑party storage to reduce cross‑site tracking.
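To make the broadcast concrete, here is a trimmed, illustrative bid request. The field names (app.bundle, device.ifa, device.geo, user.data[].segment) are modeled on the OpenRTB 2.x specification; every value and the list of bidders are invented. The loop stands in for an exchange sending each bidder its own copy.

```python
import json

# Trimmed bid request with OpenRTB 2.x-style field names; all values are invented.
bid_request = {
    "id": "req-123",
    "app": {"bundle": "com.example.weather"},
    "device": {"ifa": "6d92078a-8246-4ba4-ae5b-76104861e7dc",
               "geo": {"lat": 40.71, "lon": -74.01},
               "os": "Android"},
    "user": {"data": [{"segment": [{"id": "outdoor-enthusiast"}]}]},
}

bidders = ["dsp_a", "dsp_b", "dsp_c", "dsp_d"]  # hypothetical bidders

received = {}
for bidder in bidders:
    # Serialize and parse to simulate each bidder receiving its own copy,
    # whether or not it wins the auction and whether or not you click.
    received[bidder] = json.loads(json.dumps(bid_request))

holders = [b for b, req in received.items() if req["device"]["ifa"]]
print(f"{len(holders)} parties saw device ID {bid_request['device']['ifa'][:8]}...")
```

Only one bidder wins the impression, but all four now hold the ad ID, coarse location, and segment.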

“Partners” and “service providers”: contract language that enables sharing

- Privacy policies often list “partners” and “service providers” who may receive data for processing, analytics, or marketing.

- Depending on the contract and law, some sharing may be permitted unless you opt out.

How to See What’s Out There: Self-Audits, Broker Lookups, and Exposure Signals

You can’t protect what you can’t see. Start with your own identifiers and obvious leakage points, then move to formal requests.

Finding your identifiers: ad IDs, data in account dashboards, email aliases

- On phones: Note your mobile ad ID or ensure it’s disabled/reset (see official OS guidance below).

- In major accounts: Check privacy dashboards for ad personalization signals, inferred interests, and location history.

- Emails: If you use aliasing (e.g., plus‑addressing or “Hide My Email”), list which alias was used where. That helps trace leaks.
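A small sketch of a per-site alias scheme plus the lookup that traces a leak. It assumes your provider supports plus-addressing; the mailbox name and sites are hypothetical.

```python
BASE_USER, DOMAIN = "yourname", "email.com"  # hypothetical mailbox

def alias_for(site: str) -> str:
    """Plus-address alias, e.g. yourname+shopco-com@email.com (provider must support '+')."""
    tag = site.lower().replace(".", "-")
    return f"{BASE_USER}+{tag}@{DOMAIN}"

# Your own record of where each alias was used.
issued = {alias_for(s): s for s in ["ShopCo.com", "news.example", "coupon-app"]}

def trace_leak(to_address: str) -> str:
    """Given the address a spam message was sent to, report which signup it came from."""
    return issued.get(to_address.strip().lower(), "unknown (not an issued alias)")

print(trace_leak("yourname+coupon-app@email.com"))  # coupon-app
```

Plus tags are easy for a sender to strip once they know the convention, so random aliases (such as Hide My Email) are harder to undo.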

Broker directories and opt-out aggregators: pros and cons

- Some regulators publish broker lists or rights portals. Aggregators can save time but may require extra data; weigh the tradeoffs before using any service.

- Direct requests to larger, well‑known brokers often deliver the biggest early wins.

Red flags: spam patterns, scam targeting, and precision ad personalization

- New spam bursts after signing up somewhere, or highly specific ads soon after a visit or purchase, can indicate fresh data flows.

- Track timing: note what changed in the last 1–2 weeks and which identifiers you used.
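If you keep a simple log of signups and purchases, the timing check is a date-window filter. This sketch uses hypothetical entries and a 14-day default window to match the one-to-two-week guidance.

```python
from datetime import date, timedelta

# Your own log of signups and purchases (hypothetical entries).
events = [
    (date(2026, 1, 3), "Joined loyalty program at GroceryCo"),
    (date(2026, 1, 10), "Installed coupon app, linked inbox"),
    (date(2025, 12, 1), "Bought running shoes online"),
]

def likely_triggers(spike_day: date, window_days: int = 14):
    """Return logged events within window_days before the spam/ad spike."""
    start = spike_day - timedelta(days=window_days)
    return [desc for day, desc in sorted(events) if start <= day <= spike_day]

print(likely_triggers(date(2026, 1, 15)))
```

A match is a lead, not proof; combine it with alias tracing to narrow things down.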

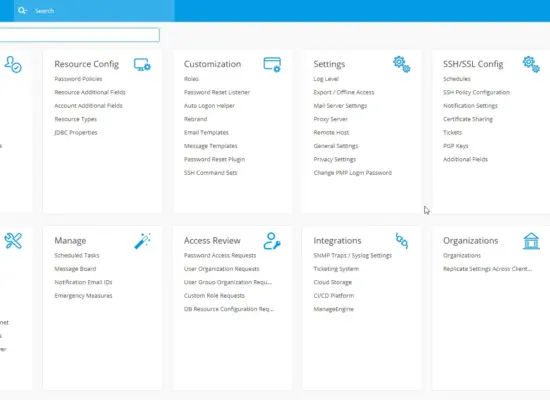

Fight Back, Part 1: Opt-Outs, Deletions, and Access Requests That Actually Work

Target high‑impact brokers first, minimize what you share during verification, and document everything. Expect to repeat periodically.

Step-by-step opt-out workflow: prioritize biggest brokers first

- Identify major brokers likely to hold your data (marketing, people‑search, location). Submit access, deletion, or opt‑out requests where law allows.

- Repeat for niche brokers tied to your interests, apps, or region. Calendar follow‑ups.

- Keep a log: request date, confirmation numbers, promised timelines.
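A spreadsheet works fine for the log, but here is the same idea as a small sketch that flags overdue requests. The 45-day default window is an assumption; check the deadline your jurisdiction actually sets. The brokers, dates, and confirmation numbers are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class PrivacyRequest:
    broker: str
    kind: str                # "access", "delete", or "opt-out"
    submitted: date
    confirmation: str
    window_days: int = 45    # assumed response window; varies by law
    closed: bool = False

    @property
    def due(self) -> date:
        return self.submitted + timedelta(days=self.window_days)

def overdue(log, today):
    """Open requests whose response date has passed: time for a follow-up."""
    return [r for r in log if not r.closed and today > r.due]

log = [
    PrivacyRequest("BrokerA", "delete", date(2026, 1, 2), "A-1001"),
    PrivacyRequest("BrokerB", "opt-out", date(2026, 1, 20), "B-77", window_days=15),  # hypothetical shorter deadline
    PrivacyRequest("BrokerC", "access", date(2025, 11, 1), "C-9", closed=True),
]

for r in overdue(log, today=date(2026, 3, 1)):
    print(f"Follow up with {r.broker} (ref {r.confirmation}), due {r.due}")
```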

Identity verification safely: minimizing extra data during requests

- Provide only what’s necessary to verify identity (e.g., the email/phone they already have).

- If allowed, submit redacted IDs and watermark scans “For privacy request use only.”

- Use secure channels offered by the company; avoid sending sensitive info over email if a secure portal exists.

When to escalate: complaints, appeals, and documented follow-ups

- If a response is overdue or incomplete, send a polite follow‑up referencing your ticket.

- If unresolved, file complaints with appropriate authorities in your jurisdiction. In the U.S., the Federal Trade Commission accepts reports at reportfraud.ftc.gov.

- Keep copies of all communications and screenshots of policies at the time of your request.

Fight Back, Part 2: Reduce Future Data Leakage on Phones, Browsers, and Accounts

Dial down exposure across the layers you control: device, browser, accounts, and networks. Don’t chase perfection—pick sustainable defaults.

Phone settings: location permissions, background access, ad measurement toggles

- Location: Set most apps to “While Using,” turn off “Precise Location” when not needed, and review which apps have background access quarterly.

- Wi‑Fi/Bluetooth scanning: On many Android devices, disable “Wi‑Fi scanning” and “Bluetooth scanning” if you don’t need them for non‑GPS location.

- Ad ID and tracking: Reset or delete your mobile ad ID and limit ad personalization.

- Apple iOS: control app tracking permissions with App Tracking Transparency (see Apple’s guidance at support.apple.com/en-us/HT212025).

- Android: manage/reset the Advertising ID and “Ads” settings (see Google’s developer/user guidance at support.google.com/googleplay/android-developer/answer/6048248).

- Background refresh: Limit background activity for apps that don’t need it.

Browser defenses: tracker blocking, cookie partitioning, anti-fingerprinting tradeoffs

- Tracker blocking: Use built‑in protections and content blockers. Firefox’s Enhanced Tracking Protection details: support.mozilla.org.

- Cookie settings: Prefer “block third‑party cookies” or partitioning. Chrome cookie controls: support.google.com/chrome/answer/95647.

- Anti‑fingerprinting: Protective features can slightly break sites; consider per‑site exceptions for critical services.

Account hygiene: alias emails, burner numbers, and minimizing loyalty linkage (lawful use only; no fraud)

- Aliases: Use unique email aliases for signups. Apple’s “Hide My Email” guidance: support.apple.com/en-us/HT210425.

- Phone numbers: Consider secondary numbers for signups where permitted. Do not misrepresent identity or violate terms.

- Loyalty programs: Join only those you value; opt out of data sharing where options exist. It’s okay to skip the discount if the data tradeoff isn’t worth it.

- Cloud histories: Review ad personalization and location activity in your major accounts and turn off features you don’t need (see provider help centers).

Realistic Expectations: What You Can’t Fully Stop and How to Maintain a Privacy Routine

Privacy is risk reduction, not invisibility. You can meaningfully cut exposure, but some flows continue through public records, household inference, and essential services.

Residual data: public records, data already resold, and household inference

- Public data (e.g., property records) and legally required disclosures persist.

- Previously resold data may live in downstream systems for months or longer.

- Even if you minimize your own signals, other household members’ devices can reintroduce linkage.

Maintenance cadence: quarterly checks, new app reviews, and breach monitoring

- Quarterly: review app permissions, ad settings, and opt‑out status with major brokers.

- Before installing new apps: skim permissions and privacy policies; prefer apps that work without precise location or contact syncing.

- For identity theft risk: in the U.S., monitor your credit and learn about free reports via the FTC at consumer.ftc.gov.

Privacy vs. usability: choosing a sustainable threat model

- Decide which conveniences are worth the data cost; configure defaults accordingly.

- Use private modes or secondary browsers for sensitive research; keep one browser for logins to reduce cross‑contamination.

FAQ

Short, practical answers to common questions about data brokers and defensive privacy steps.

- Are “anonymous” IDs like MAIDs or hashed emails really anonymous?

Not reliably. MAIDs are device‑level and linkable across apps. Hashed emails/phones can be matched by any party hashing the same input. Treat them as pseudonymous, not anonymous.

- Can I delete my data from every broker?

No. You can reduce a lot, but you won’t purge everything. Laws vary, some uses are exempt, and data may re‑enter from new sources. Think in cycles: request, verify, re‑check.

- What about location bans in sensitive places?

Some jurisdictions limit collection or use of sensitive‑location data. Compliance and enforcement vary; still restrict app permissions and background access yourself.

- Does resetting my mobile ad ID stop tracking?

It helps, but apps may create new linkages via logins or device traits. Combine resets with limiting tracking permissions and reviewing app access.

- Is using a VPN enough to block brokers?

No. VPNs hide IPs from some parties, but apps and sites still see identifiers, logins, and device signals. Use VPNs as one layer, not a cure‑all.

- Should I use separate emails and browsers?

Yes, if you can manage it. Separate contexts reduce cross‑linking. Use distinct email aliases and keep a logged‑in “shopping” browser separate from a “research/private” browser.

- How long do opt‑outs take?

Often weeks to a few months. Some respond quickly; others batch updates. Keep confirmations and check back periodically.

- Can I force a company to disclose its data sources?

In some places, you have a right to know categories of sources. Exact vendors may not be listed. Use your rights where available and review privacy policies for partner categories.

Glossary

- Identity graph: A database linking identifiers (emails, MAIDs, IPs, devices) into person/household profiles with confidence scores.

- MAID (Mobile Advertising ID): A device‑specific advertising identifier (e.g., AAID on Android, IDFA on iOS). Resettable; often used for attribution and targeting.

- RTB (Real‑Time Bidding): Automated ad auctions where bid requests can share device and context data with multiple parties.

- Enrichment: Appending extra inferred or purchased attributes (demographics, interests, propensities) to a profile.

- IDFV: iOS Identifier for Vendor, linking apps from the same developer on a device.

- Hashing: Transforming data (e.g., email) into a fixed‑length string. It is one‑way and not encryption, but it is deterministic: anyone who hashes the same input gets the same output, so matching is possible.

- Householding: Grouping individuals/devices into a shared household based on co‑location and shared identifiers.

Prioritized checklist

Use this three‑tier plan to cut exposure quickly and build a sustainable routine.

Quick wins (15 minutes)

- On your phone, set most apps’ location to “While Using” and disable “Precise” for non‑maps apps.

- Reset or delete your mobile ad ID and limit ad personalization (see Apple and Android guidance linked above).

- Switch your main browser to block or partition third‑party cookies; enable tracker blocking.

- Turn off inbox‑scanning purchase features you don’t use.

Weekend actions

- Submit access/deletion/opt‑out requests to the largest data brokers you identify; document confirmations.

- Audit app permissions (camera, contacts, photos, Bluetooth, motion sensors) and remove apps you don’t use.

- Create unique email aliases for high‑risk signups and update accounts that share an email unnecessarily.

- Review ad and location histories in your major accounts; pause or delete where allowed.

Ongoing maintenance

- Quarterly: re‑review app permissions, browser settings, and key broker opt‑out status.

- Before installing new apps: check permissions and privacy choices; prefer minimal data collection.

- Track major purchases and new memberships; watch for spam/ad spikes afterward.

- In the U.S., review guidance on free credit reports via the FTC: consumer.ftc.gov.

Key takeaways

- Data brokers thrive post‑cookies by linking MAIDs, hashed emails/phones, location, and purchases.

- “Anonymous” often means “pseudonymous”—linkable with enough signals.

- Opt‑outs plus device/browser hardening deliver meaningful, lawful risk reduction.

- Prioritize big brokers and high‑leakage apps; document requests and verify results.

- Limit precise location, background access, and cross‑context identifiers.

- Use aliases and separate contexts to reduce linkage across services.

- Expect residual data; maintain a quarterly privacy routine.

- Use official resources for tracking controls and escalation when needed.